If you’ve been following the AI development space in early 2026, you’ve probably heard the name “Ralph Wiggum” more times than you’d expect for a character from The Simpsons. This isn’t about yellow animated characters—it’s about a technique that’s fundamentally changing how developers work with AI coding assistants.

2026: The Year Coding Became Cheap and Audience Became the Moat

You probably found this blog through linkedin or x

claude cowork: the ai coworker we didn't know we needed

so anthropic just dropped something pretty interesting. Claude Cowork. and i think it might be a bigger deal than people realize

Building SmallShop Part 1: Laying the Foundation

So i was helping a friend set up her online boutique on shopify. should have been simple right? haha

Lessons from Building Global Pet Sitter

i co-founded Global Pet Sitter with my friend Geert. two developers, over 10 years of housesitting experience between us, trying to build something transparent and community-driven. it started as a simple idea and honestly turned into one of the most educational experiences of my life

Garmigotchi: A Tamagotchi That Lives Off Your Health Data

what if your smartwatch had a tiny creature living inside it. one that thrives when you’re healthy and dies when you neglect yourself

Building Hyperscalper: A Fully Client-Side Crypto Trading Terminal

Hyperscalper is a professional cryptocurrency trading terminal I built for Hyperliquid DEX. What makes it unique is that it’s fully client-side - no backend servers, no middlemen. Your private keys never leave your browser.

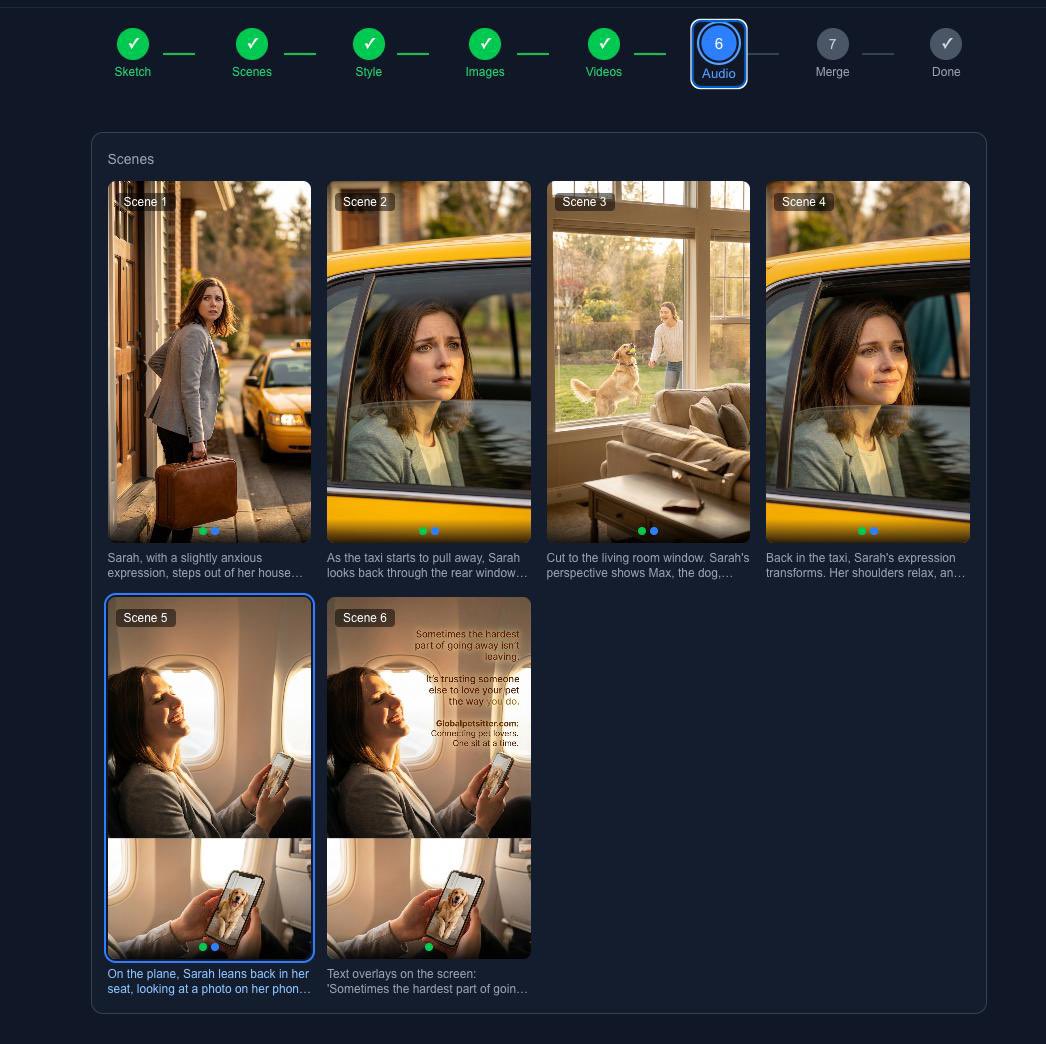

i built a tool to generate video ads with ai

so i run globalpetsitter.com. connecting pet owners with sitters around the world. like any startup i need promo content, specifically video ads for social media. the problem is making even a simple 30 second ad takes forever

What is llms.txt and Why Your Website Might Need One

so you’ve probably heard about llms.txt if you’ve been following the AI space lately. think of it like robots.txt but for LLMs

Decentralized Microservices With IPFS

What is IPFS?

Setting Up a Private Npm Registry With Sinopia

When you have several front-end projects going in the same company, and you are using a component-based framework like Angular 2, it is important that you can reuse those components across all projects. It is possible to pay npm for private repositories, or to make use of npm link, but in this post, I will:

A Post History Scroller

Quite a few months ago I was working on a personal project called mailwall.me. The idea was a platform where you can share what you think someone will like using their mailwall.me address. As most people in the world have email, but not necessarily a Facebook, Twitter, WhatsApp, etc. account, people can email media to people with a mailwall.me account. The message would get parsed, and the media extracted and displayed in the proper way on the platform. You could tie it up with IFTTT to mail you on social media events and it would automatically categorize everything accordingly. This post history scroller is an artifact of this project. I was looking for a new way to create a historical overview of the messages.

Getting Started With Microservices and Go

Traditionally, applications have been built as monoliths: single applications that become larger and more complex over time, which limits our ability to react to change. Go is expressive, concise, clean, and efficient. Its concurrency mechanisms make it easy to write programs that get the most out of multicore and networked machines, while its novel type system enables flexible and modular program construction. Go compiles quickly to machine code yet has the convenience of garbage collection and the power of run-time reflection. It’s a fast, statically typed, compiled language that feels like a dynamically typed, interpreted language.

From Gulp to Webpack

I recently started working on a big Angular 2 webapp. The company I joined has been working on this project since October 2015. It is one of the first companies in Belgium to jump on the ng2 bandwagon, and it has been a joy and a challenge to learn and work with this framework every day.

Automated E2E API Testing and Documentation for Node.js

This was my first time doing test-driven API development in Node.js and I must say, I really enjoyed it. I used to fall back on a Chrome plugin called Postman a lot before getting used to Mocha and test-driven development. It was a joy to write code for a test that described what the endpoint should do before actually coding the new endpoint. It made me think more about the code I was going to write and what it should do. Sometimes there would also be a total API overhaul (like the implementation of soft deletes). And man, was I glad I had written unit tests for all my endpoints when that happened!

An Overview of the Blockchain Development Ecosystem

This is an effort to map out the current ecosystem of tools and platforms that facilitate the development of ‘smart contracts’ or ‘autonomous agents’ using blockchain technologies.

Sending Bitcoins using Bitcore and Node.js

This week I was asked to create a simple web app that allows users to send bitcoins from one address to another using Node.js. After skimming through some online articles and forums I discovered Bitcore. I also looked at an alternative called BitcoinJS.

Getting Around the Product Variant Limitation in Shopify

Recently, I was struggling with some Shopify products that had more than 100 variant combinations. Shopify has a product variant limitation of 100 per product, whereas products themselves are unlimited. To get around the limitation I wrote a little Node script that creates a unique Shopify product for every variant and links the SKUs dynamically to option selectors in the product pages.
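To give a rough idea of the "one product per variant" approach, here is a minimal sketch, not the actual script. It only shows the expansion step: turning a set of options into one product record per combination, each with its own SKU. The option data, the function names (`expandVariants`, `toProducts`) and the record shape are all made up for illustration and are not Shopify's API payload.

```javascript
// Hypothetical option data; names and values are assumptions for illustration.
const options = {
  Size: ["S", "M", "L"],
  Color: ["Red", "Blue"],
};

// Build every combination of option values (the Cartesian product).
function expandVariants(opts) {
  return Object.entries(opts).reduce(
    (combos, [name, values]) =>
      combos.flatMap((combo) =>
        values.map((value) => ({ ...combo, [name]: value }))
      ),
    [{}]
  );
}

// Turn each combination into its own product record with a derived SKU.
// The record shape is an assumption, not what the Shopify API expects.
function toProducts(baseTitle, baseSku, opts) {
  return expandVariants(opts).map((combo) => {
    const values = Object.values(combo);
    return {
      title: `${baseTitle} - ${values.join(" / ")}`,
      sku: [baseSku, ...values].join("-").toUpperCase(),
      options: combo,
    };
  });
}

const products = toProducts("T-Shirt", "TEE", options);
console.log(products.length); // 6 (3 sizes x 2 colors)
console.log(products[0].sku); // TEE-S-RED
```

Because every record carries its own SKU and option values, a product page could map the selected options back to the matching product, which is roughly what linking SKUs to option selectors amounts to.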

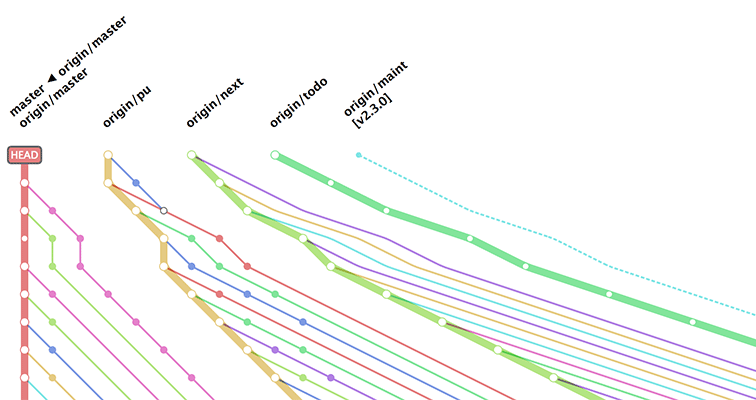

Gitup, the New Git Manager

Work quickly, safely, and without headaches. The Git interface you’ve been missing all your life has finally arrived.

WebStorm vs. Atom

I have recently switched to the Atom editor and really love it. I love experimenting with new coding environments, and so far PhpStorm and WebStorm have provided me with the best all-round support for everything I make Web-related. But now… there is: Atom!!!

Experimenting with Google Spreadsheets, Assemble.io and Internationalisation

Assemble.io is a really cool static page generator that ties neatly into Grunt, currently my favorite build tool. Assemble works with Handlebars templates, which allow you to slice up your HTML files into reusable pieces that get assembled at build time. One of the cool features is that it allows you to tie in data coming from JSON or YAML files. If you are not familiar with Assemble yet, please go through the documentation before continuing.
